By Ben Martin

In testing both software and hardware RAID performance I employed six 750GB Samsung SATA drives in three RAID configurations — 5, 6, and 10. I measured performance using both Bonnie++ and IOzone benchmarks. I ran the benchmarks using various chunk sizes to see if that had an effect on either hardware or software configurations.

For the hardware test I used an $800 Adaptec SAS-31205 PCI Express 12-port hardware RAID card. For software RAID I used the Linux kernel software RAID functionality of a system running 64-bit Fedora 9. The test machine was an AMD X2 running at 2.2GHz equipped with 2GB of RAM. While a file server set up to use software RAID would likely sport a quad core CPU with 8 or 16GB of RAM, the relative differences in performance between hardware and software RAID on this machine should still give a good indication of the performance differences to be expected on other hardware. I tested against about 100GB of space spread over the six disks using equal-sized partitions. I didn’t use the entire available space, in order to speed up RAID creation.

Because the motherboard in the test machine had only four SATA connectors, I had to compromise in order to test six SATA drives. I tested a single drive on the motherboard SATA controller and then as a single disk exported through the Adaptec card so that I could see the performance difference that the Adaptec card brings to single disk accesses. I then attached the six disks to the Adaptec card and exported them to the Linux kernel as single non-RAIDed volumes. I tested software RAID by creating a RAID over these six single volumes accessed through the Adaptec card. One advantage of using six single disk volumes through the Adaptec card is that it makes the comparison a direct one between using the hardware RAID chip and using the Linux kernel to perform RAID: the SATA controller that the drives are attached to is the same in both tests. The benchmark between using the motherboard and the Adaptec card SATA controllers to access a single disk gives an indication of how much difference there is in the SATA controller for single disk access. As a minor spoiler, there isn’t a huge advantage for either controller for single disk accesses, so the figures for accessing the six disks individually and running software RAID on top should generalize to other SATA controllers. Knowing the performance difference between using the Adaptec card and the onboard SATA controller to access a single volume, you can focus on the difference between the hardware and software RAID benchmarks.

I ran tests using both the ext3 and XFS filesystems. These filesystems were created to be chunk and stride aligned to the RAID where possible. I used the mount-time options data=writeback,nobh for ext3 and nobarrier for XFS. Write barriers seem to carry an extremely high time penalty on the Adaptec card, so if you plan on using XFS on it, it is wise to run it with a UPS and battery backup to protect the metadata instead of using write barriers. In addition to the alignment options, I used lazy-count=1 to create the XFS filesystem, which allows less write contention on the filesystem superblock.
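As a rough illustration of what "chunk and stride aligned" means, the sketch below derives alignment values from the RAID level, disk count, and chunk size. It assumes a 4KB filesystem block size and two copies per chunk for RAID-10; the function names are hypothetical, and the option strings (`-E stride=` for ext3, `-d su=,sw=` and `-l lazy-count=1` for XFS) are my reading of the standard mkfs options, not the exact commands used in these tests.

```python
def data_disks(level: int, disks: int) -> int:
    """Number of disks' worth of data in one full stripe."""
    if level == 5:
        return disks - 1          # one chunk of parity per stripe
    if level == 6:
        return disks - 2          # two chunks of parity per stripe
    if level == 10:
        return disks // 2         # assumption: two copies of every chunk
    raise ValueError(f"unsupported RAID level: {level}")


def alignment_options(level: int, disks: int, chunk_kb: int, block_kb: int = 4) -> dict:
    """Build illustrative mkfs alignment options for one array geometry."""
    stride = chunk_kb // block_kb             # filesystem blocks per chunk
    width = data_disks(level, disks)          # data chunks per stripe
    return {
        "ext3": f"-E stride={stride}",
        "xfs": f"-d su={chunk_kb}k,sw={width} -l lazy-count=1",
        "stripe_blocks": stride * width,      # filesystem blocks per full stripe
    }


if __name__ == "__main__":
    # The six-disk arrays from this article with a 256KB chunk size.
    for level in (5, 6, 10):
        print(f"RAID-{level}:", alignment_options(level, disks=6, chunk_kb=256))
```

The point of the calculation is that the filesystem's allocation unit lines up with a whole number of RAID chunks, so a full-stripe write does not straddle a stripe boundary.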

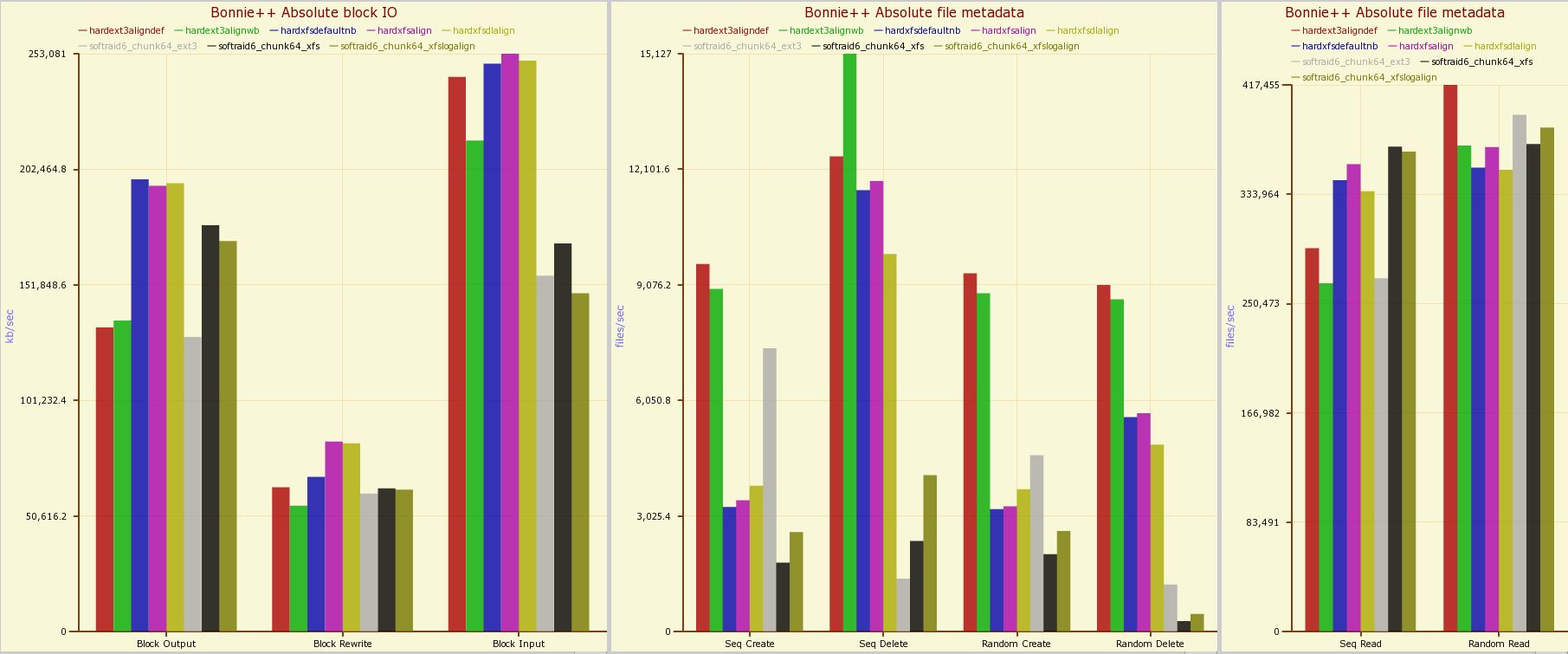

In order to understand the legends in the graphs, you have to know about the manner in which the filesystems were created. Hardware RAID configurations all have the prefix “hard.” hardext3aligndef is ext3 created with -E stride and mounted without any special options. hardext3alignwb is the same as hardext3aligndef but mounted with the data=writeback,nobh options. The XFS filesystem for xfsdefaultnb was created with lazy-count=1 and mounted with nobarrier. hardxfsalign is the same as xfsdefaultnb but is stride- and chunk-aligned to the particular RAID configuration. xfsdlalign is the same as hardxfsalign, but I made an effort to stripe-align the XFS journal as well. On the software RAID side, the ext3 configuration is the same as the ext3alignwb hardware configuration, the plain XFS option the same as hardxfsalign, and the xfslogalign the same as the xfsdlalign hardware configuration.

As you will see from the graphs, the alignment of the XFS filesystem log to stripe boundaries can make a substantial difference to the speed of filesystem metadata operations such as create and delete. The various configurations are also included on the block transfer graphs because these filesystem parameters can affect block transfer rates as well as metadata figures. I don’t discuss the metadata operation benchmarks explicitly in the article but have included them in the graphs. One trend that is hard to miss is that hardware RAID makes ext3 random create and delete operations substantially faster.

Single disk performance

Neither the Adaptec nor the onboard SATA controller is a clear winner for block accessing a single disk. The Adaptec card is a clear winner in the file metadata operations, probably due to the 256MB of onboard cache memory on the controller card. It is interesting that block input is actually higher for the onboard SATA controller than the Adaptec. Of course, the Adaptec card is not really designed for accessing a single disk that is not in some sort of hardware RAID configuration, so these figures should not be taken as an indication of poor SATA ports on the card. Because the Adaptec card does not offer a clear advantage when accessing a single disk, using the Adaptec controller to expose the six disks for software RAID should not provide an unfair advantage relative to other software RAID setups.

RAID-5 performance

The main surprise in the first set of tests, on RAID-5 performance, is that block input is substantially better for software RAID; ext3 got 385MB/sec in software against 330MB/sec in hardware. The hardware dominates in block output, getting 322MB/sec against the 174MB/sec achieved by software for aligned XFS, which makes hardware about 85% faster than software (1.85 times the throughput). These differences in block output are not as large when using the ext3 filesystem. Block rewrite is almost identical for ext3 in software and hardware, whereas with XFS the rewrite figures are 128MB/sec for hardware and 109MB/sec for software.

In situations where you want to run RAID-5 and block output speed is as important as block input speed, XFS on a hardware RAID is the choice. In cases where rewriting data is a frequent operation, such as running a database server, there can be a speed gain of more than 15% from using hardware RAID over software.
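The relative gains quoted for RAID-5 can be recomputed from the raw throughput figures. The helper below is only a sketch; `percent_faster` is a name introduced here for illustration, and the numbers are the MB/sec values reported above.

```python
def percent_faster(fast_mb_s: float, slow_mb_s: float) -> float:
    """How much faster the first figure is than the second, in percent."""
    return (fast_mb_s / slow_mb_s - 1.0) * 100.0


# (description, faster figure, slower figure), all in MB/sec from the RAID-5 tests
raid5_results = [
    ("XFS block output, hardware over software", 322, 174),
    ("XFS rewrite, hardware over software", 128, 109),
    ("ext3 block input, software over hardware", 385, 330),
]

if __name__ == "__main__":
    for label, fast, slow in raid5_results:
        print(f"{label}: {percent_faster(fast, slow):.1f}% faster")
```

Expressing every comparison as "percent faster than the slower result" keeps the figures comparable; a ratio of 1.85 corresponds to an 85% gain, not 185%.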

RAID-6 performance

The SAS-31205 Adaptec card supports chunk sizes up to 1,024KB in hardware. For the RAID-6 performance tests I used 64KB, 256KB, and 1,024KB chunk sizes for both hardware and software RAID.

Shown below is the graph for RAID-6 using a 256KB chunk size. The block input advantage that software RAID had in RAID-5 has evaporated, leaving all block input fairly even across the board. The block output and rewrite graphs retain the same overall structure, but all figures are slower. XFS block output drops to 255MB/sec for hardware and 153MB/sec for software in RAID-6.

Using RAID-5 leaves you vulnerable to data loss, because you can only sustain a single disk loss. When a single disk goes bad, you replace it with another and the RAID-5 begins to incorporate the new disk into the array. This is a very disk-intensive task that can last for perhaps five or six hours, during which time the remaining disks in the RAID are being heavily accessed, raising the chances that any one of them might fail. Because RAID-6 can sustain two disk failures without losing data, you can survive a second disk failing while you are already replacing a disk that has failed.
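To make the failure-tolerance argument concrete, the sketch below enumerates every way two of six disks can fail and counts how many combinations each RAID level survives. It is a simplified model, not a description of any controller's behavior: the RAID-10 case assumes mirror pairs on disks (0,1), (2,3), and (4,5), and the function names are made up for this example.

```python
from itertools import combinations


def survives(level: int, failed: set, disks: int = 6) -> bool:
    """Return True if the array still holds all data after `failed` disks are lost."""
    if level == 5:
        return len(failed) <= 1
    if level == 6:
        return len(failed) <= 2
    if level == 10:
        # Assumption: disks are mirrored in adjacent pairs; data is lost
        # only when both members of the same pair have failed.
        return all(not {d, d + 1} <= failed for d in range(0, disks, 2))
    raise ValueError(f"unsupported RAID level: {level}")


def surviving_cases(level: int, n_failed: int, disks: int = 6) -> tuple:
    """Count (survivable, total) combinations of `n_failed` simultaneous failures."""
    cases = list(combinations(range(disks), n_failed))
    ok = sum(survives(level, set(case), disks) for case in cases)
    return ok, len(cases)


if __name__ == "__main__":
    for level in (5, 6, 10):
        ok, total = surviving_cases(level, n_failed=2)
        print(f"RAID-{level}: survives {ok} of {total} two-disk failures")
```

Under these assumptions RAID-6 is the only one of the three layouts that is guaranteed to survive any second failure during a rebuild.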

The output figures will be lower in RAID-6 than in RAID-5 because two blocks of parity have to be calculated instead of the one block that RAID-5 uses. The block output figures for using XFS on hardware RAID-6 are impressive. Once again, for rewrite intensive applications like database servers there is a noticeable speed gain to running RAID-6 in hardware.
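For readers unfamiliar with what "calculating parity" involves, here is a minimal sketch of the single XOR parity block that RAID-5 relies on, including the rebuild of a lost chunk. The chunk contents and sizes are toy values chosen for illustration. The second parity block in RAID-6 requires a more expensive calculation that is not shown here, which is one reason its output figures are lower.

```python
from functools import reduce


def xor_blocks(blocks):
    """XOR equally sized byte blocks together, column by column."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


# Five data chunks in one stripe of a six-disk RAID-5 (toy 8-byte chunks).
data = [bytes([value] * 8) for value in (0x11, 0x22, 0x33, 0x44, 0x55)]
parity = xor_blocks(data)

# Simulate losing the disk holding chunk 2 and rebuild it from the rest.
survivors = data[:2] + data[3:] + [parity]
rebuilt = xor_blocks(survivors)

if __name__ == "__main__":
    print("rebuilt chunk matches original:", rebuilt == data[2])
```

Every write that does not cover a whole stripe has to update this parity, which is why rewrite-heavy workloads are where parity RAID implementations differ most.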

The difference between write performance shows up on the IOzone graph for 256KB chunk RAID using XFS. Note also that the write performance for hardware RAID is better across the board when using larger files that cannot fit into the main memory cache. The fall-away in hardware RAID performance for smaller files is also present in the RAID-10 IOzone write benchmark.

Shown below is the graph for RAID-6 using a 64KB chunk size. Using the smaller chunk size makes block output substantially faster for software RAID but also more than halves the block input performance. From the block transfer figures there doesn’t seem to be any compelling reason to run 64KB chunk sizes for RAID-6.

Shown below is the performance of RAID-6 using a 1,024KB chunk size. For rewrite, the hardware RAID is about 10-15MB/sec faster than the software RAID, which manages 55-60MB/sec. Other than that, using a 1,024KB chunk size removes the block output advantage that the RAID card had for the 256KB chunk size RAID-6.

RAID-10 performance

For the RAID-10 performance test I used 256KB and 1,024KB chunk sizes and the default software RAID-10 layout of n2. The n2 layout is equivalent to the standard RAID-10 arrangement, making the benchmark a clearer comparison.
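The following sketch shows how a "near" layout with two copies places chunks on six disks, as I understand the Linux md documentation: the copies of each chunk are written to consecutive devices, wrapping to the next row when the end of the device list is reached. `near_layout` is a hypothetical helper written for this illustration, not part of any RAID tool.

```python
def near_layout(chunk: int, devices: int = 6, copies: int = 2):
    """Return a list of (device, row) positions holding copies of a logical chunk."""
    first = chunk * copies  # index of the first copy in device-major order
    return [((first + c) % devices, (first + c) // devices) for c in range(copies)]


def layout_grid(chunks: int, devices: int = 6, copies: int = 2):
    """Build rows of chunk numbers as they would appear across the devices."""
    rows = {}
    for chunk in range(chunks):
        for device, row in near_layout(chunk, devices, copies):
            rows.setdefault(row, [None] * devices)[device] = chunk
    return [rows[r] for r in sorted(rows)]


if __name__ == "__main__":
    # Six logical chunks on six devices: each row holds three mirrored pairs.
    for row in layout_grid(6):
        print(row)
```

With an even number of devices and two copies, each chunk and its mirror sit side by side in the same row, which matches the conventional stripe-over-mirrors picture of RAID-10.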

Shown below is the 256KB chunk size graph. For block input the hardware wins with 312MB/sec versus 240MB/sec for software using XFS, and 294MB/sec for hardware versus 232MB/sec for software using ext3. Given that block output was fairly close and that there are no parity calculations required for RAID-10, I would have expected the block rewrite differences between software and hardware to be closer than they are. It is a little surprising that even without the burden of calculating parity information, rewrite is substantially faster for hardware RAID-10 than software. So once again, if you intend to run an application that demands rewrite performance such as a relational database server, consider spending extra for a hardware card.

When using a 1,024KB chunk size there is little difference in any block transfer speeds between hardware and software RAID. There is an anomaly with the stride-aligned XFS through the hardware RAID-10, causing a loss of about 50MB/sec in block input performance.

Note that although rewrite performance is very close across the board for hardware and software RAID-10 using a 1,024KB chunk size, the fastest 1,024KB rewrite is 99.5MB/sec, whereas hardware RAID with 256KB chunks and XFS reaches 116MB/sec. So for database servers, the 256KB chunk size with a hardware RAID is still the fastest solution. If you are mostly interested in running a file server, the differences in block input and output speeds between a software RAID-10 using a 1,024KB chunk size (288MB/sec in, 219MB/sec out) and a hardware RAID-10 using a 256KB chunk size (312MB/sec in, 243MB/sec out) are not likely to justify the cost of the hardware RAID card. I chose these chunk sizes for the comparison because they represent the best performance for software (1,024KB) and hardware (256KB) RAID-10.

Wrap up

For RAID-10 using a smaller chunk size such as 256KB you get a noticeable difference between hardware and software in rewrite performance. Hardware beats software substantially in output for parity RAID, with hardware being 66% faster for block output and 20% faster for rewrite in a 256KB chunk RAID-6. Therefore, if you want performance and the capability to sustain multiple simultaneous drive failures, you should investigate hardware RAID solutions. Aside from the benchmarks, the hardware RAID card also supports hot-plug replacement, reducing the downtime needed to swap out dead drives. Based on these test results, you might instead consider buying some cheaper SATA ports and striping two software RAID-6 arrays rather than spending the same budget on a hardware RAID card.

My tests showed that the choice of filesystem has a huge impact on performance, with XFS being substantially quicker for output on parity RAIDs, though in some configurations, such as 256KB-chunk RAID-10 block input, XFS can be slower than ext3.

It would be interesting to extend testing to include other filesystems to see which filesystems performed best for mainly read or write performance. The Adaptec hardware is capable of running RAID-50 and -60. It would be interesting to see how software RAID compares at this higher end, perhaps running a RAID-50 on a 16-port card using four RAID-5 arrays as the base.